AI is coming for your kids and votes. Georgia should act now.

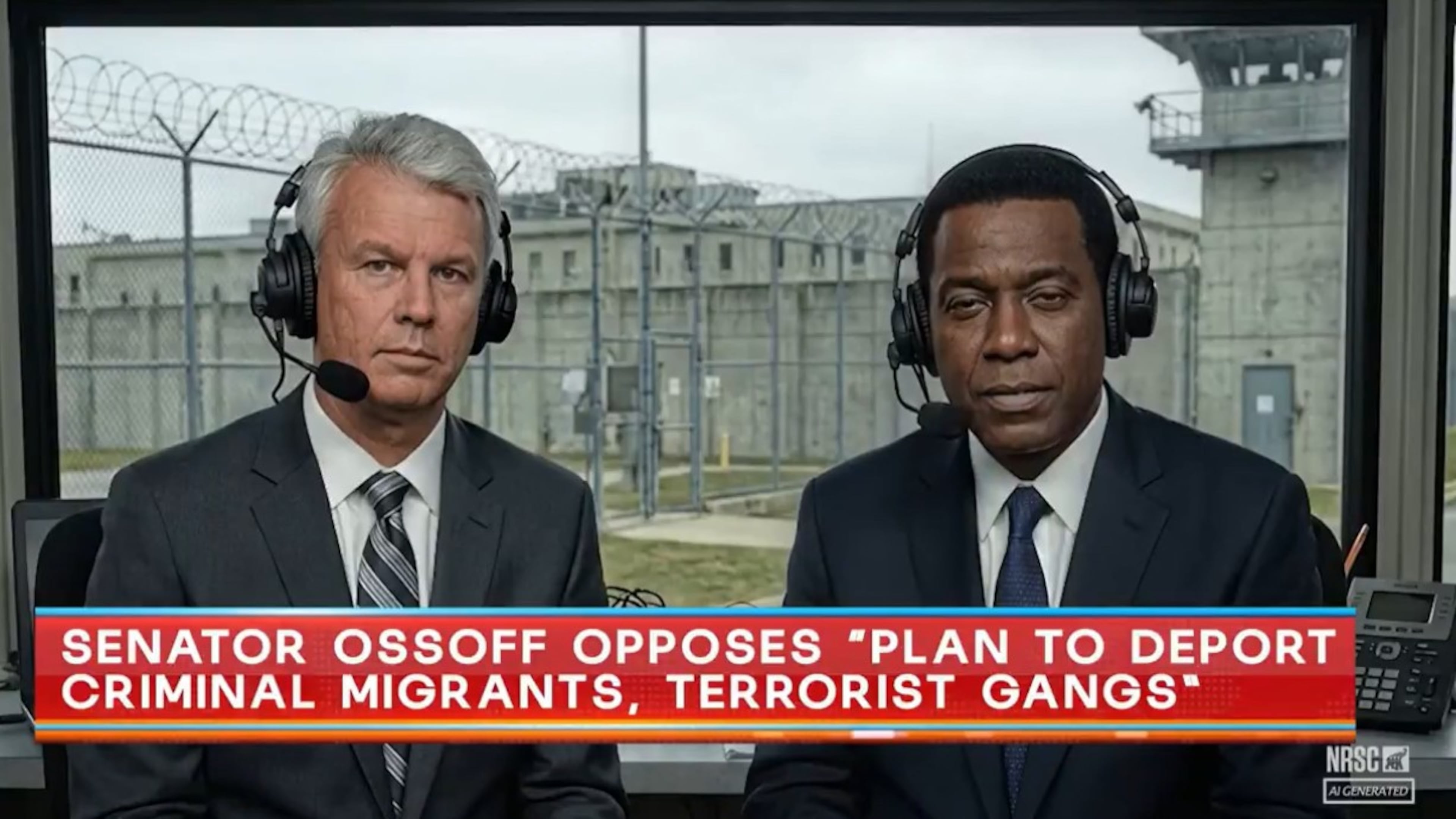

A new AI-generated ad in Georgia’s U.S. Senate race over the weekend was instructive and disturbing. The new spot from the National Republican Senatorial Committee targeted Democratic Sen. Jon Ossoff and was timed to coincide with the University of Georgia’s appearance in the SEC football championship game on Saturday night.

The ad stars two AI-generated “sportscasters,” who look perfectly real to the naked eye. But instead of sitting in front of a football field, they’re sitting in front of an immigration detention facility.

“Let’s meet the illegals on Team Ossoff,” AI bot No. 1 says before flashing AI-generated videos of undocumented immigrants recently charged or convicted of violent crimes, whom the announcers wrongly say Ossoff wants on his “team.”

A small disclaimer at the bottom of the video notes that artificial intelligence was used to create the ad, as does the press release that accompanied it. But a Newsmax story about the ad the next day included no such disclaimer, saying only, “NRSC Ad Slams Jon Ossoff for Backing Violent Illegals.” It took just one stop on the internet for the truth about the AI ad to disappear altogether.

The NRSC ad followed a different AI-generated ad from Georgia U.S. Rep. Mike Collins’ Senate campaign that created a deepfake version of Ossoff saying things about the recent government shutdown that he never said. In the governor’s race, an outside group supporting Attorney General Chris Carr used AI to create two “actors” in an ad against Lt. Gov. Burt Jones, without disclosing the fact that the video was entirely fake. “What’s real are the facts,” a spokesman for the PAC said, which isn’t really the point here.

If AI is left unregulated, expect Georgia campaigns to soon use it the same way a Democratic U.S. House candidate in Pennsylvania did in 2024, when she deployed an AI chatbot named “Ashley” to make live calls to voters for her campaign. “Ashley” was trained to hold full conversations with voters and often changed their minds over the course of a call.

A recent study by researchers from Stanford and the London School of Economics found that AI chatbots were significantly more persuasive in swaying political opinions than traditional political ads. But the study also found the chatbots were prone to making up facts and lying to voters when left to their own devices. The bots learned quickly that misinformation is more effective than the truth.

Truly, Pandora’s box has nothing on this situation.

Apart from the risks of AI deepfakes and chatbots involved in political campaigns, the dangers from unregulated AI are especially real for children online. On Sunday night, CBS’s “60 Minutes” detailed numerous suicides among children and teenagers after they befriended unregulated online AI characters and chatbots.

One set of parents described their 13-year-old daughter’s suicide after multiple AI characters convinced her to take explicit photos of herself. Other cases have included AI chatbots explaining to children in detail how to kill themselves.

I’ve written before about an effort by two Republican state House members, Todd Jones and Brad Thomas, to put basic guardrails on AI-generated content in Georgia’s political campaigns. The General Assembly reconvenes next month, and the Georgia Senate should take up their bill and pass it in time to make a difference in the 2026 elections.

Twenty-six other states have already acted to regulate AI in political campaigns, including Alabama, Florida and Texas. Alabama, y’all. It’s not impossible.

Outside of campaigns, there are more areas where lawmakers need to act quickly to establish safety standards for AI-generated content, especially when it comes to kids online.

Because Congress has failed to pass any basic AI safeguards or protections, the attorneys general of North Carolina and Utah created a new bipartisan task force last month to help states identify emerging dangers from AI and to suggest ways to protect the public as AI accelerates. But Georgia leaders need to act, too.

Unfortunately, the biggest challenge to protecting Georgians from AI may be President Donald Trump, who signed a series of executive orders earlier this year limiting federal regulations on the AI industry. An early version of Trump’s tax and spending bill also included a 10-year moratorium on states passing their own AI protections; it was stripped from the bill at the last minute in July.

On Monday, he wrote on Truth Social that he’ll sign yet another executive order this week to ban states from passing their own laws regulating the life-changing technology.

“There must be only One Rulebook if we are going to continue to lead in AI. We are beating ALL COUNTRIES at this point in the race, but that won’t last long if we are going to have 50 States, many of them bad actors, involved in RULES and the APPROVAL PROCESS,” he wrote.

But Trump did not say what his “One Rulebook” would include to protect consumers, voters or children while he’s blocking states from acting. Georgia leaders should fight this in any way possible. The American auto industry didn’t argue that states should be banned from enacting speed limits or seat belt laws. And the AI industry should not get a free pass from states looking to create basic protections for their residents.

The children, communities and campaigns that lawmakers protect by putting up basic AI guardrails in Georgia may be their own, and they’ll certainly be yours, too.